🤖 The Next Internet: Agentic Web 4.0

The Billion-Dollar Infrastructure Transformation of the Agentic Web

We're witnessing the early stages of a transformation as profound as the emergence of the web itself, but this time the shift is driven by collaboration between AI systems that execute tasks and the humans whose intentions they serve. Microsoft's recent moves toward the agentic web, announced at Build 2025, exemplify this evolution: the company has integrated over 50 AI agent tools across platforms including GitHub, Azure, Microsoft 365, and Windows. NLWeb (Natural Language Web), meanwhile, has emerged as a toolset for converting traditional web interfaces into structured, agent-readable environments.

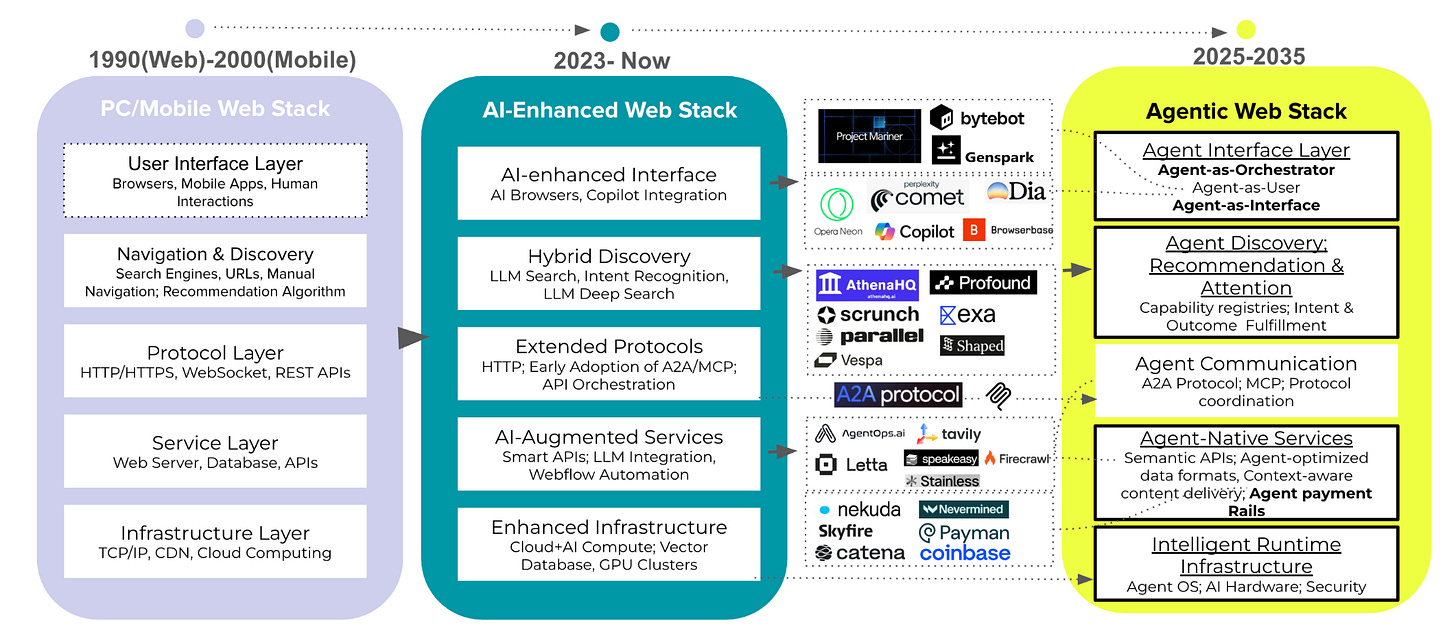

The web is progressing from a human-navigated space, through an agent-assisted ecosystem, toward full automation, in which autonomous AI agents and multi-agent systems collaborate to reason, plan, and execute goals directly. This transition requires rethinking the core components of the web and mobile stack: interfaces; communication protocols; mechanisms for storing, retrieving, and exchanging information; indexing, search, and recommender systems; and the economic exchange models of the previous era. All of them must be reimagined for this new paradigm of intelligent, goal-directed computation.

The Inception and Revolution of the Web

To understand the magnitude of what's coming, consider how the web began. In 1989, Tim Berners-Lee proposed the World Wide Web not as a grand vision of global transformation but as a practical way for scientists at CERN to share information more efficiently. The first website, published in December 1990, was simple: just text explaining what the web was and how to use it.

That humble beginning evolved through distinct phases, each marked by technical breakthroughs that redefined how we interact with information. Static document sharing in the 1990s relied on linear, hierarchical navigation: users spent significant time and effort drilling through directory structures to locate information. Latent Semantic Indexing (LSI) shifted the paradigm by using singular value decomposition to capture latent semantic relationships between terms and documents, letting search systems recognize conceptual similarity beyond exact keyword matches and cope with synonymy and polysemy. The PageRank algorithm then pioneered "link-based voting," estimating the importance and authority of web pages from their hyperlink structure, and fundamentally transformed how information was discovered and ranked.
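The "link-based voting" idea behind PageRank fits in a short power iteration. The sketch below is a minimal textbook version, not Google's production ranking: the four-page link graph is invented, and the damping factor of 0.85 is the conventional value suggested in the original PageRank paper.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Power-iteration PageRank over a mapping of page -> pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page receives the baseline "teleport" share regardless of links.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its vote equally among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no out-links): spread its vote over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages link to "c", nobody links to "d".
web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(web)
top_page = max(ranks, key=ranks.get)
```

Page `c`, which receives links from three pages, ends up with the highest score, while `d`, which nobody links to, keeps only the baseline teleport share.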

The interactive Web 2.0 era of social media and cloud services in the 2000s then gave way to today's mobile-first web, driven by advances in recommender system architectures. Attention mechanisms and Transformer architectures marked a significant advance in sequential recommendation, enabling models to capture long-range dependencies and complex patterns in users' interaction histories. Contemporary work pairs these attention-based models with self-supervised learning approaches optimized for mobile contexts, creating the personalized, context-aware experiences we now take for granted.
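To make the attention idea concrete, the sketch below applies scaled dot-product attention, the core operation of the Transformer, to a user's interaction history in the spirit of self-attentive sequential recommenders. The embeddings, item names, and the choice of the most recent item as the query are illustrative assumptions; real models learn separate query, key, and value projections and stack many such layers.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) applied to the values."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


# Hypothetical 2-d item embeddings for a user's last three interactions, oldest first.
history = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
# Self-attention from the most recent item over the whole history.
context, weights = attend(history[-1], history, history)

# Score candidate next items against the attended context vector.
candidates = {"trail_shoes": [1.0, 0.0], "yoga_mat": [0.0, 1.0]}
next_item = max(candidates,
                key=lambda name: sum(c * x for c, x in zip(candidates[name], context)))
```

The weights let older but relevant interactions (here the first item) influence the recommendation, which is what "capturing long-range dependencies" means in practice.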

Each transformation, through these technical innovations, fundamentally rewrote the rules of digital interaction, commerce, and information access. Now we stand at the threshold of the next revolution, one that will be just as transformative but driven by autonomous AI agents using the web rather than human users. It will require entirely new paradigms for retrieval, recommendation, and interaction that go beyond even these foundational breakthroughs.

Transformation in the Web Architecture

Every layer of the stack transforms in the next web

Today's web stack optimizes for human interaction at every layer. Browsers render visual interfaces for human eyes and attention, search engines return ranked lists for human interpretation, and protocols like HTTP carry request-response exchanges between human-operated clients and servers. This architecture has served us well for three decades, but it creates friction and gaps when AI agents try to navigate systems designed for human cognition. We're currently in a transitional phase, where AI capabilities augment human-centric design rather than replace it. AI-enhanced interfaces such as Copilot integrations provide intelligent assistance; hybrid discovery systems combine traditional search with LLM-powered intent recognition; extended protocols are beginning to support early Agent2Agent (A2A) and Model Context Protocol (MCP) interactions; and AI-augmented services offer smart APIs alongside traditional interfaces.

This phase represents the bridge between worlds—maintaining backward compatibility with human users while laying the groundwork for agent-native interactions.

The fully realized agentic web represents a complete architectural inversion. At the interface layer, Agent-as-Orchestrators will replace browsers, coordinating AI agents on both sides of each interaction (agents acting as users and agents acting as interfaces) across services to accomplish complex goals. Discovery systems will transform from search engines into capability registries, where agents can identify and negotiate with services that meet their specific requirements.

The Obsolescence of Visual Interfaces

Web browsers are fundamentally human-centric tools that render visual interfaces and respond to human input, whereas the emerging agentic web operates on entirely different principles. Instead of following static links, AI agents navigate through semantic relationships, discovering resources and forming dynamic connections based on context and relevance rather than link proximity.

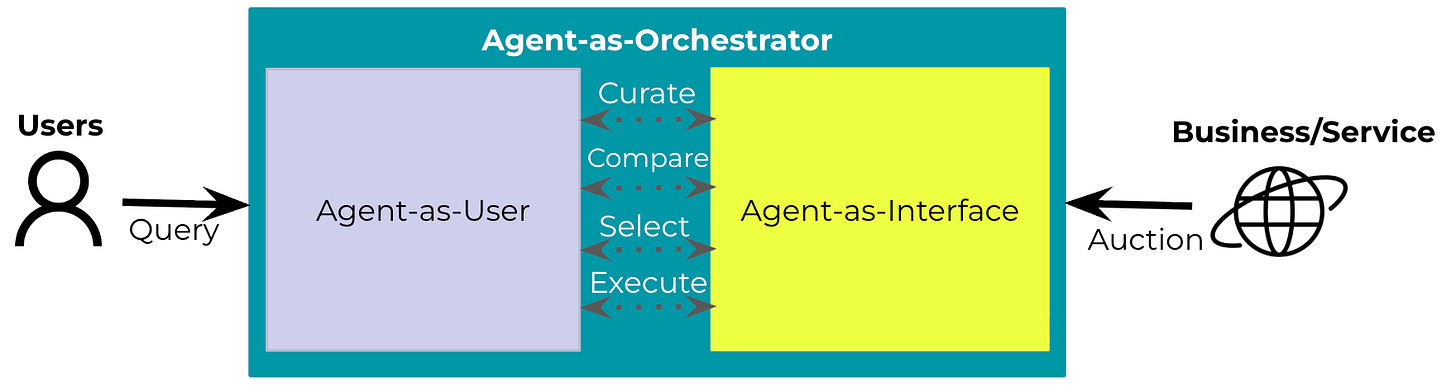

This transformation manifests through two distinct but converging interfaces: Agent-as-Interface and Agent-as-User. Each represents a different philosophy about how machine intelligence will mediate our relationship with web systems.

Agent-as-Interface positions AI agents as intermediaries between human intent and machine execution. These systems operate through API-based interactions: structured communications that offer speed, reliability, and predictable outcomes. When you ask an agent to book a flight, it communicates directly with airline reservation systems through well-defined protocols, bypassing consumer interfaces. This approach delivers computational efficiency and robust error handling, but it only works where services already expose such interfaces, which ties it to existing infrastructure.

Agent-as-User teaches machines to interact with the web the way humans do. Using computer vision and GUI-level automation, these agents parse visual interfaces, calculate coordinates, and execute precise interactions with elements designed for human use. Because any site a person can operate is, in principle, usable this way, they offer a breadth of compatibility that API-based systems cannot match.

Agent-as-Orchestrator brings the two together to create tomorrow's "web interface": coordination systems that orchestrate multiple AI agents across distributed services to accomplish complex, multi-step tasks. These orchestrators will mediate interactions between agent users and agent interfaces, filtering and contextualizing the information exchanged. Consider an agent user that requires specific data: the orchestrator ensures that agent interfaces expose only the relevant, necessary information, preserving privacy and efficiency. Seen from either side, the orchestrator acts as both interface and user, taking requests, processing context, distributing subtasks, and executing coordinated actions across the agent network. For human users, this enables hyper-personalized visual experiences: orchestrators fetch structured data and dynamically render customized interfaces through standardized protocols, turning raw information into presentations tailored to individual needs and preferences.
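The orchestrator's mediating role, distributing subtasks while exposing only necessary information, can be sketched in a few lines. Everything here (the `Orchestrator` and `AgentInterface` classes, the fixed plan, the agent and field names) is hypothetical; a real orchestrator would plan with a model and talk to remote agents over a protocol rather than calling local functions.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentInterface:
    """Hypothetical service-side agent: a name, the fields it needs, and a handler."""
    name: str
    required_fields: set[str]
    handler: Callable[[dict], dict]


@dataclass
class Orchestrator:
    """Hypothetical orchestrator that runs a fixed plan of subtasks in order."""
    agents: dict[str, AgentInterface] = field(default_factory=dict)

    def register(self, agent: AgentInterface) -> None:
        self.agents[agent.name] = agent

    def run(self, user_context: dict, plan: list[str]) -> dict:
        results: dict = {}
        for step in plan:
            agent = self.agents[step]
            available = {**user_context, **results}
            # Minimal disclosure: each agent sees only the fields it declares it needs.
            visible = {k: v for k, v in available.items() if k in agent.required_fields}
            results.update(agent.handler(visible))
        return results


# Usage: two hypothetical agents and a user context containing a sensitive field.
seen: dict[str, set[str]] = {}


def flight_agent(ctx: dict) -> dict:
    seen["flights"] = set(ctx)
    return {"flight": f"{ctx['origin']}->{ctx['destination']} on {ctx['date']}"}


def hotel_agent(ctx: dict) -> dict:
    seen["hotels"] = set(ctx)
    return {"hotel": f"hotel in {ctx['destination']} from {ctx['date']}"}


orchestrator = Orchestrator()
orchestrator.register(AgentInterface("flights", {"origin", "destination", "date"}, flight_agent))
orchestrator.register(AgentInterface("hotels", {"destination", "date"}, hotel_agent))
trip = orchestrator.run(
    {"origin": "SFO", "destination": "NYC", "date": "2025-06-01", "passport_number": "X1234567"},
    ["flights", "hotels"],
)
```

Neither agent ever receives `passport_number`, because the orchestrator, not the agents, decides what each side sees.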

From Search to Agent Intent and Outcome Fulfillment

The agentic web will also fundamentally reimagine how digital resources are discovered, evaluated, and orchestrated. Traditional search engines index static content, but agentic systems must catalog dynamic capabilities—mapping not just what services exist, but how they perform, cooperate, and can be composed into complex workflows. Webpage metadata evolves beyond simple descriptors to include rich, actionable schemas detailing API specifications, trust credentials, performance benchmarks, and negotiation protocols. Search engines will transform into sophisticated orchestrators that don't merely retrieve relevant agents but actively compose and coordinate multi-agent workflows to fulfill complex delegated tasks.
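A capability registry differs from a search index in that entries describe what a service can do and how well, so agents can filter on hard requirements. The sketch below assumes a hypothetical record format with a capability set, an API spec URL, a trust flag standing in for verified credentials, and a latency benchmark; the service names and `.example` URLs are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapabilityRecord:
    """Hypothetical registry entry describing what a service can do and how well."""
    service: str
    capabilities: frozenset[str]
    api_spec_url: str       # where a machine-readable API description would live
    trust_verified: bool    # stand-in for checked trust credentials
    p95_latency_ms: float   # advertised or measured performance benchmark


class CapabilityRegistry:
    def __init__(self) -> None:
        self._records: list[CapabilityRecord] = []

    def publish(self, record: CapabilityRecord) -> None:
        self._records.append(record)

    def find(self, needed: set[str], max_latency_ms: float,
             require_trust: bool = True) -> list[CapabilityRecord]:
        """Services covering every needed capability within constraints, fastest first."""
        matches = [
            r for r in self._records
            if needed <= r.capabilities
            and r.p95_latency_ms <= max_latency_ms
            and (r.trust_verified or not require_trust)
        ]
        return sorted(matches, key=lambda r: r.p95_latency_ms)


registry = CapabilityRegistry()
for name, caps, trusted, latency in [
    ("fastfly", {"search_flights", "book_flight"}, True, 120.0),
    ("cheapair", {"search_flights", "book_flight"}, True, 450.0),
    ("shadyair", {"search_flights", "book_flight"}, False, 80.0),
    ("flightinfo", {"search_flights"}, True, 50.0),
]:
    registry.publish(CapabilityRecord(name, frozenset(caps),
                                      f"https://{name}.example/openapi.json", trusted, latency))

booking_options = [r.service for r in registry.find({"search_flights", "book_flight"}, 500)]
```

The fastest service, `shadyair`, is excluded because its trust credentials are unverified; an agent would see `fastfly` first, then `cheapair`.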

This evolution will create entirely new ranking paradigms. Where PageRank measured authority through link popularity, agentic ranking algorithms will evaluate cooperation success rates, task completion rates, responsiveness, and contribution to collaborative workflows. Services will compete not for human clicks but for "agent attention": the limited cognitive resources of autonomous systems executing sophisticated tasks. This competition drives the emergence of agent-oriented advertising infrastructure, including recommendation engines, capability reranking systems, inter-agent referral networks, and auction-based visibility mechanisms.
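As a rough illustration of ranking by cooperation rather than by links, the sketch below scores services on the four signals named above. The weights, the 200 ms latency target, and the linear scoring formula are all assumptions made for illustration, not an established agentic ranking algorithm.

```python
from dataclasses import dataclass


@dataclass
class ServiceStats:
    name: str
    cooperation_success_rate: float  # share of multi-agent workflows that succeeded, 0-1
    completion_rate: float           # share of delegated tasks completed, 0-1
    median_latency_ms: float         # responsiveness; lower is better
    contribution: float              # share of workflow value credited to the service, 0-1


# Hypothetical weights; a production ranker would presumably learn these from outcomes.
WEIGHTS = {"cooperation": 0.3, "completion": 0.4, "responsiveness": 0.1, "contribution": 0.2}


def responsiveness(latency_ms: float, target_ms: float = 200.0) -> float:
    """1.0 at or below the target latency, decaying proportionally above it."""
    if latency_ms <= target_ms:
        return 1.0
    return target_ms / latency_ms


def agent_rank_score(s: ServiceStats) -> float:
    return (WEIGHTS["cooperation"] * s.cooperation_success_rate
            + WEIGHTS["completion"] * s.completion_rate
            + WEIGHTS["responsiveness"] * responsiveness(s.median_latency_ms)
            + WEIGHTS["contribution"] * s.contribution)


def rank(services: list[ServiceStats]) -> list[ServiceStats]:
    return sorted(services, key=agent_rank_score, reverse=True)


reliable = ServiceStats("reliable_calendar", 0.95, 0.97, 300.0, 0.6)
flashy = ServiceStats("flashy_calendar", 0.70, 0.75, 100.0, 0.4)
ranking = [s.name for s in rank([flashy, reliable])]
```

The faster but less reliable service ranks second, which reflects the shift from rewarding visibility to rewarding outcomes.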

Today's web economy largely runs on advertising models that capture human attention to sell products and services. For discovery and recommendation, the agentic web implies a profound architectural and economic restructuring of the web itself. Resources will be allocated through competition for agent attention, creating market dynamics in which services optimize for machine discoverability rather than human engagement. The agentic web shifts toward outcome-based economics, where agents pay for successful task completion rather than for page views or click-throughs.
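Paying for successful task completion can be sketched as an escrow: the requesting agent commits payment up front, and the money moves to the service only if the task succeeds. The class, account names, and amounts (integer cents, to avoid rounding) are hypothetical, and how an outcome is verified is deliberately left out of scope.

```python
from enum import Enum


class Outcome(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


class OutcomeEscrow:
    """Hypothetical pay-on-outcome settlement; amounts are integer cents."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}
        self.held: dict[str, tuple[str, str, int]] = {}  # task_id -> (payer, payee, amount)

    def deposit(self, account: str, amount: int) -> None:
        self.balances[account] = self.balances.get(account, 0) + amount

    def open_task(self, task_id: str, payer: str, payee: str, amount: int) -> None:
        if self.balances.get(payer, 0) < amount:
            raise ValueError(f"{payer} cannot cover {amount} cents")
        self.balances[payer] -= amount  # lock the payment in escrow
        self.held[task_id] = (payer, payee, amount)

    def settle(self, task_id: str, outcome: Outcome) -> None:
        payer, payee, amount = self.held.pop(task_id)
        # The service is paid only for a successful outcome; otherwise the requester is refunded.
        receiver = payee if outcome is Outcome.SUCCESS else payer
        self.balances[receiver] = self.balances.get(receiver, 0) + amount


escrow = OutcomeEscrow()
escrow.deposit("travel_agent", 1000)
escrow.open_task("t1", "travel_agent", "calendar_service", 300)
escrow.open_task("t2", "travel_agent", "flaky_service", 200)
escrow.settle("t1", Outcome.SUCCESS)
escrow.settle("t2", Outcome.FAILURE)
```

The calendar service is paid for the task it completed; the failed task costs the requester nothing.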

Instead of bidding for ad placement, services compete on their ability to help agents achieve goals efficiently and effectively. A calendar service succeeds not by displaying advertisements, but by seamlessly integrating with other agents to coordinate complex scheduling across multiple people and systems.

Changing Information Structures and Agent Communication

The structural transformation of the Agentic Web is not limited to the role of agents as users or interfaces; it also extends to how information is stored (in-model versus in-document), linked (semantic versus hyperlink), and accessed (agent-driven versus search-driven). Rather than relying on hyperlinks, agents discover resources, services, and other agents through semantic discovery and adaptive integration.
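Semantic discovery means matching an agent's intent against what resources say they do, rather than following links between them. The sketch below uses cosine similarity over word-count vectors as a self-contained stand-in for learned embeddings; the service names and descriptions are invented, and a production system would use an embedding model and an approximate nearest-neighbor index.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Real systems would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


# Hypothetical service descriptions with no hyperlinks between them.
services = {
    "route_planner": "plan delivery routes and estimate shipping times",
    "shoe_catalog": "search running shoes by price size and sustainability rating",
    "weather_feed": "hourly weather forecasts for any city",
}


def discover(intent: str, k: int = 1) -> list[str]:
    """Return the k services whose descriptions are most similar to the intent."""
    q = embed(intent)
    scored = sorted(services, key=lambda name: cosine(q, embed(services[name])), reverse=True)
    return scored[:k]


shoe_match = discover("find sustainable running shoes under 150")
weather_match = discover("what is the weather forecast")
```

Word counts miss near-synonyms such as "sustainable" and "sustainability"; closing that gap is exactly what learned semantic embeddings are for.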

The web's current foundation—HTTP/HTTPS—was designed for simple request-response interactions between humans and servers. Future communication protocols must support semantic-level interactions, enabling agents to share not just data but meaning, context, and intent.

Emerging protocols such as the Model Context Protocol (MCP), open-sourced by Anthropic in 2024, and Google's Agent2Agent protocol (A2A) represent early standardization efforts. MCP standardizes how AI applications connect to external tools and data sources, while A2A targets communication between agents; together they let agents exchange not just data but intentions, negotiate capabilities, and maintain context across complex multi-step interactions. Companies that provide managed MCP services and gateways for deploying and maintaining MCP servers at scale represent the next critical infrastructure layer, one that can unlock broader adoption. This will catalyze an entirely new ecosystem encompassing MCP identity management, gateway optimization, and observability platforms, creating a comprehensive development environment for agent-native applications.
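At the wire level, MCP messages are JSON-RPC 2.0 objects. The sketch below builds a `tools/call` request and unpacks a tool result using only the standard library; the transport (stdio or HTTP) and the initialization handshake are omitted, and the tool name, arguments, and response text are hypothetical. The MCP specification is the authority on the full message set.

```python
import json
from itertools import count

_ids = count(1)


def jsonrpc_request(method: str, params: dict) -> str:
    """Build a JSON-RPC 2.0 request, the message format MCP is built on."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params})


# Ask an MCP server to run a tool. "search_flights" and its arguments are hypothetical;
# a client would normally discover available tools first with a "tools/list" request.
call = jsonrpc_request("tools/call", {
    "name": "search_flights",
    "arguments": {"origin": "SFO", "destination": "JFK", "date": "2025-06-01"},
})

# A response a server might send back: tool output arrives as a list of content items.
raw_response = json.dumps({
    "jsonrpc": "2.0", "id": 1,
    "result": {"content": [{"type": "text", "text": "3 flights found"}], "isError": False},
})


def tool_text(response_json: str) -> list[str]:
    """Extract text content from a tools/call response, surfacing errors."""
    msg = json.loads(response_json)
    if "error" in msg:  # protocol-level JSON-RPC error
        raise RuntimeError(msg["error"]["message"])
    result = msg["result"]
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError("tool reported an error")
    return [item["text"] for item in result["content"] if item["type"] == "text"]
```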

Agent-native Services

At the fourth layer of the stack, web services, agent orchestrators will become the key routing middle layer as we move beyond the client-server model, transforming the entire stack. Web servers will evolve into agent frameworks, databases will be replaced by networks of cross-agent billing ledgers, and APIs will become demand skill and intent vector mappers. This transformation will create entirely new categories of infrastructure companies, much like the transition from mainframes to client-server to cloud computing. The biggest opportunities lie in building the economic rails that let autonomous agents transact value: imagine Stripe for agent-to-agent payments, or AWS for distributed agent compute, designed from the ground up for intelligent, autonomous systems rather than retrofitted from human-centric architectures.
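The cross-agent billing ledger is described here only at a high level; one plausible reading is a double-entry ledger in which every agent-to-agent charge is recorded as a matched debit and credit. The sketch below follows that reading, with hypothetical account names and a hypothetical `external` account standing in for funds entering the network.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Entry:
    tx_id: int
    account: str
    amount_cents: int  # positive = credit, negative = debit
    memo: str


@dataclass
class CrossAgentLedger:
    """Hypothetical append-only, double-entry ledger for agent-to-agent charges."""
    entries: list[Entry] = field(default_factory=list)
    next_tx: int = 1

    def balance(self, account: str) -> int:
        return sum(e.amount_cents for e in self.entries if e.account == account)

    def transfer(self, payer: str, payee: str, amount_cents: int, memo: str) -> int:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        # "external" models money entering the network, so only it may go negative.
        if payer != "external" and self.balance(payer) < amount_cents:
            raise ValueError(f"{payer} has insufficient balance")
        tx_id = self.next_tx
        self.next_tx += 1
        # Each transaction writes a matched debit and credit that cancel out.
        self.entries.append(Entry(tx_id, payer, -amount_cents, memo))
        self.entries.append(Entry(tx_id, payee, amount_cents, memo))
        return tx_id

    def is_balanced(self) -> bool:
        return sum(e.amount_cents for e in self.entries) == 0


ledger = CrossAgentLedger()
ledger.transfer("external", "shopping_agent", 5000, "top-up")
ledger.transfer("shopping_agent", "pricing_agent", 25, "price lookup")
ledger.transfer("shopping_agent", "logistics_agent", 120, "shipping quote")
```

Because every charge is a pair of entries, the ledger as a whole always sums to zero, which gives auditors a cheap consistency check across many small machine-to-machine payments.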

At the technical level, agent-native services fundamentally differ from traditional web services by operating through semantic interfaces rather than rigid API contracts, maintaining persistent context and memory across interactions, and incorporating economic decision-making directly into their execution logic. Instead of stateless HTTP endpoints that simply process requests, agent-native services use the demand skill vector mapper to dynamically interpret intent through natural language or behavioral signals, automatically route tasks through the real-time task router based on current network conditions and agent capabilities, and continuously negotiate resource usage and costs through the cross-agent billing ledger. This means an agent-native e-commerce service wouldn't just process "add item to cart" API calls, but could understand "find me the best sustainable running shoes under $150" and autonomously coordinate with inventory agents, pricing agents, and logistics agents to fulfill that intent while optimizing for cost, speed, and user preferences in real-time.
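The intent-to-routing flow in the running-shoes example can be approximated as below. The skill table, agent names, costs, and the load-penalty formula are all hypothetical; a keyword lookup stands in for the demand skill vector mapper, which in practice would embed the intent and match it against skill vectors, and queue depth stands in for current network conditions.

```python
from dataclasses import dataclass

# Hypothetical keyword-to-skill table standing in for a learned intent encoder.
SKILL_KEYWORDS = {
    "inventory": {"find", "shoes", "stock"},
    "pricing": {"under", "cheapest", "best", "price"},
    "logistics": {"deliver", "shipping", "ship"},
}


@dataclass
class Agent:
    name: str
    skill: str
    cost_cents: int
    queue_depth: int  # current load, a proxy for network conditions


def map_intent_to_skills(intent: str) -> list[str]:
    """Return the skills whose keywords appear in the intent, in sorted order."""
    words = set(intent.lower().replace("$", "").split())
    return sorted(skill for skill, keys in SKILL_KEYWORDS.items() if words & keys)


def route(intent: str, agents: list[Agent], load_penalty_cents: int = 10) -> dict[str, str]:
    """For each required skill, pick the agent with the lowest load-adjusted cost."""
    plan = {}
    for skill in map_intent_to_skills(intent):
        candidates = [a for a in agents if a.skill == skill]
        if not candidates:
            raise LookupError(f"no agent offers {skill!r}")
        best = min(candidates,
                   key=lambda a: a.cost_cents + load_penalty_cents * a.queue_depth)
        plan[skill] = best.name
    return plan


agents = [
    Agent("stock_a", "inventory", 20, 0),
    Agent("stock_b", "inventory", 15, 3),  # cheaper, but busy
    Agent("price_x", "pricing", 5, 1),
    Agent("price_y", "pricing", 8, 0),
    Agent("ship_z", "logistics", 50, 0),
]
plan = route("find me the best sustainable running shoes under $150", agents)
```

The cheapest inventory agent loses to an idle one once its queue is priced in, a small instance of routing "based on current network conditions and agent capabilities."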

Companies that focus on agent orchestration platforms, semantic vectorization tooling, and industry-specific agent networks will capture the most value, particularly those solving the cross-agent billing and trust problems that traditional payment and cloud infrastructure companies aren't positioned to address. The winners will be those who build agent-native solutions that treat intelligence, autonomy, and economic coordination as first-class architectural concerns rather than afterthoughts bolted onto existing systems.

The Opportunities Ahead

At the foundational level, infrastructure companies that successfully architect the protocols, orchestration platforms, and security frameworks for autonomous agent operations will capture disproportionate value by becoming the essential utilities of this new paradigm. Much as Amazon Web Services and Cloudflare became indispensable to the current internet, these agent infrastructure providers will form the invisible backbone that enables billions of autonomous interactions.

Simultaneously, every existing web service company faces an existential inflection point: it must either fundamentally redesign its offerings as agent-native services with rich semantic metadata, clear capability advertisements, and flexible integration patterns, or risk being bypassed by competitors who build agent-first experiences from the ground up.

Perhaps most intriguingly, entirely new service categories will crystallize around the unique challenges of autonomous agent ecosystems: behavior monitoring and debugging platforms that can untangle complex multi-agent interaction chains, cross-agent identity and reputation systems that establish trust without human intervention, and intelligent capability matching and discovery platforms that let agents dynamically find and integrate the best services for their tasks. These emerging categories are greenfield opportunities where startups can define entirely new markets rather than compete in established ones, creating room for category-defining companies that will shape how autonomous systems interact, transact, and evolve together.

The agentic web's distributed, autonomous nature also creates unprecedented security and web safety challenges that represent massive opportunities for startups to build entirely new categories of defense infrastructure. Preventing privacy breaches and personal data leakage becomes far harder when agents autonomously share sensitive data across networks without human oversight, requiring novel zero-knowledge proof systems and federated learning frameworks that can verify agent behavior without exposing underlying data. Machine and agent identity control demands sophisticated authentication and authorization systems that can establish trust between autonomous entities that may never have interacted before, moving beyond traditional PKI to reputation-based identity networks and behavioral biometrics for agents.
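The pairing of cryptographic identity with reputation can be sketched with the standard library. HMAC over a pre-shared key stands in here for the public-key signatures a real deployment would need (so that agents with no prior relationship could verify each other), and the moving-average reputation score, its prior, and its trust threshold are illustrative assumptions.

```python
import hashlib
import hmac
import json


def sign(message: dict, key: bytes) -> str:
    """HMAC-SHA256 over a canonical JSON encoding of the message."""
    payload = json.dumps(message, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(message: dict, signature: str, key: bytes) -> bool:
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign(message, key), signature)


class ReputationBook:
    """Hypothetical per-agent reputation: an exponentially smoothed success rate."""

    def __init__(self, prior: float = 0.5, weight: float = 0.2) -> None:
        self.scores: dict[str, float] = {}
        self.prior = prior
        self.weight = weight

    def record(self, agent_id: str, succeeded: bool) -> None:
        old = self.scores.get(agent_id, self.prior)
        # Each new interaction moves the score toward 1.0 or 0.0 by `weight`.
        self.scores[agent_id] = (1 - self.weight) * old + self.weight * (1.0 if succeeded else 0.0)

    def trusted(self, agent_id: str, threshold: float = 0.6) -> bool:
        return self.scores.get(agent_id, self.prior) >= threshold


key = b"demo-shared-secret"  # hypothetical; never hard-code real keys
msg = {"from": "booking_agent", "action": "hold_seat", "flight": "XY123"}
sig = sign(msg, key)
tampered = {**msg, "flight": "XY999"}

book = ReputationBook()
for _ in range(3):
    book.record("booking_agent", True)
for _ in range(2):
    book.record("flaky_agent", False)
```

Signatures answer "who sent this, unaltered?", while reputation answers "should I act on it?"; a trust system for agents needs both.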

The inference and reasoning capabilities of agents introduce new attack vectors where adversaries can manipulate agent decision-making through prompt injection, adversarial inputs, or reasoning corruption, creating opportunities for companies building agent-specific intrusion detection, reasoning verification systems, and semantic firewall technologies. Agent guardrails and observability require real-time monitoring of autonomous decision chains, behavioral anomaly detection, and explainable AI systems that can audit agent actions across complex multi-agent workflows, while safety and security defense must evolve from protecting static endpoints to defending dynamic, self-modifying agent behaviors that can rapidly adapt and potentially exploit unforeseen vulnerabilities. These challenges are fundamentally different from traditional cybersecurity because agents operate with intentionality and autonomy, meaning security solutions must be equally intelligent and adaptive rather than rule-based, creating a greenfield opportunity for startups to define entirely new security paradigms built for an autonomous, intelligent web.
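One building block for auditing multi-agent decision chains is a tamper-evident log, in which each recorded action carries the hash of the previous record, so any after-the-fact edit breaks the chain. This is a generic technique offered as a hypothetical sketch; the record fields and agent names are invented, and the log provides evidence of tampering, not prevention, and no explainability on its own.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Tamper-evident log of agent actions: each record hashes the one before it."""
    GENESIS = "0" * 64
    FIELDS = ("agent", "action", "rationale", "prev")

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, agent_id: str, action: str, rationale: str) -> None:
        prev = self.records[-1]["hash"] if self.records else self.GENESIS
        body = {"agent": agent_id, "action": action, "rationale": rationale, "prev": prev}
        self.records.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous record."""
        prev = self.GENESIS
        for rec in self.records:
            body = {k: rec[k] for k in self.FIELDS}
            if rec["prev"] != prev or rec["hash"] != _digest(body):
                return False
            prev = rec["hash"]
        return True


log = AuditLog()
log.append("planner", "split_task", "user asked for a weekend trip")
log.append("booking_agent", "hold_seat", "cheapest nonstop within budget")
intact = log.verify()
log.records[0]["rationale"] = "edited after the fact"
after_tamper = log.verify()
```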

It's an entirely new internet and web infrastructure being built with AI agents, and in the following blog posts, I will dig into each layer of the technology stack and new opportunities. If you're building in this space, I also organize regular researcher meetups to share insights on how the ecosystem evolves—please reach out to iris.sun@500.co.*

*Disclaimer: All articles and posts on this site are my personal views and opinions and do not reflect the views of 500 Global.
