Primer for WebAssembly: The Dawn of a New Epoch for Cloud Computing

A non-technical primer for WASM

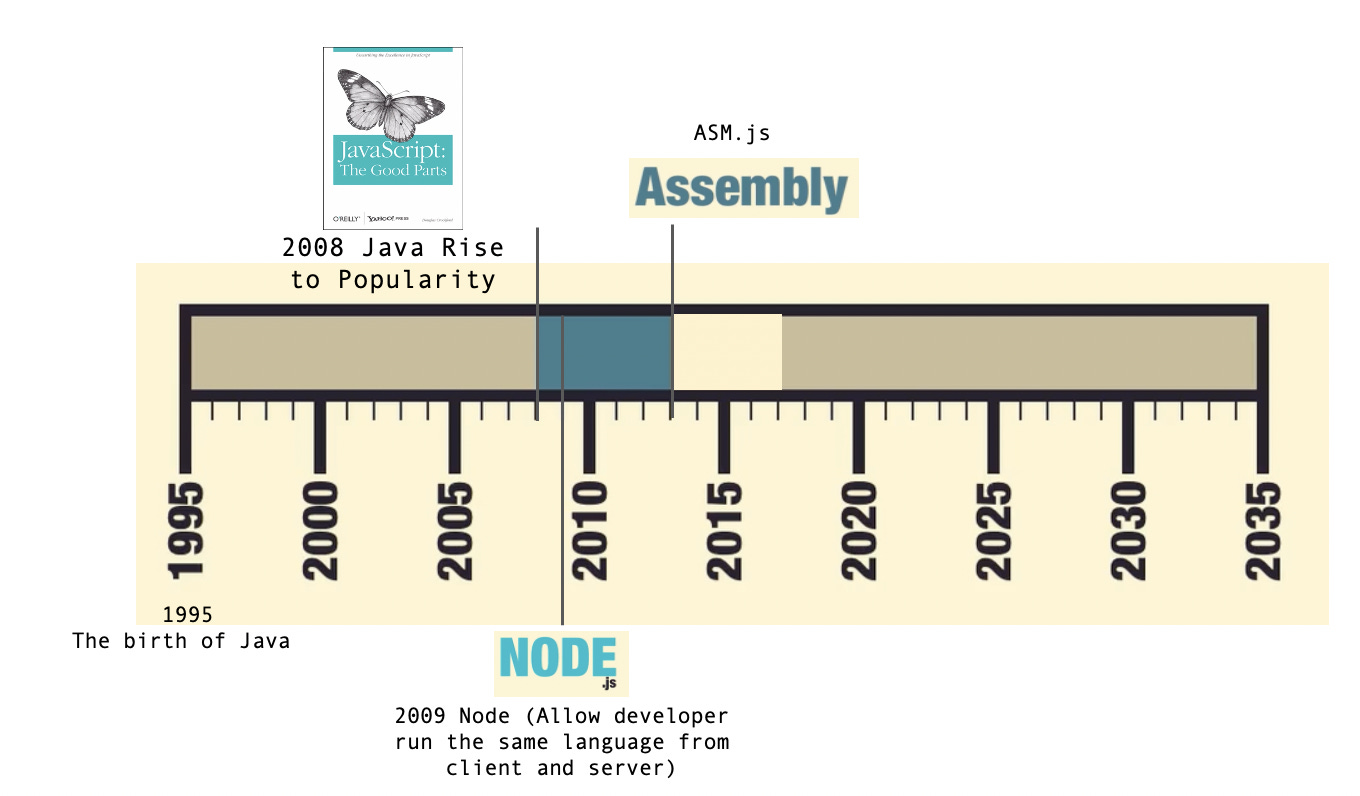

The birth and adoption of JavaScript

Before we touch on assembly or the white-hot WebAssembly (WASM), let’s discuss the cliché that “JavaScript has become the assembly language of the Web.” If JavaScript is unfamiliar to you, put simply, it is the programming language of the Web. A developer wants to instruct the computer to do something, but the two parties speak different languages. JavaScript and other high-level programming languages are best understood as intermediary languages: the developer writes in a form humans can read, and the JavaScript engine translates it into something the machine can parse and execute.

Compilers and interpreters are the two ways of translating a programming language into machine language. Compilers do not translate on the fly: they require a compilation step up front, which takes time, but that step gives them room to optimize. Interpreters, on the other hand, translate line by line as the program runs, so they start quickly but cannot do as many optimizations as compilers can. JavaScript engines started out as interpreters, and they were slow because the same lines were translated over and over again.

The emergence of just-in-time (JIT) compilers made JavaScript much faster and later drove its mass adoption as THE web language. A JIT combines the best of interpreters and compilers by adding a monitor (or profiler) that watches the code as it runs and eliminates the repeated translation of the same lines. Code that runs a few times is called ‘warm code,’ and code that runs a lot is called ‘hot code.’ When a function becomes warm, the JIT sends it to be compiled into “stubs,” which are stored so that if the monitor later sees execution hit the same lines again, with the same variable types, it simply pulls out the compiled version. When the code becomes hot, an optimizing compiler produces an even faster version. This process is called ‘compile and optimize.’
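As a rough illustration of the monitoring idea, here is a toy Python model of a profiler that counts calls and labels a function cold, warm, or hot. The class name `ToyMonitor` and both thresholds are made up for this sketch; real JavaScript engines use their own, more elaborate heuristics.

```python
from collections import Counter

# Hypothetical thresholds; real engines pick their own heuristics.
WARM_THRESHOLD = 3
HOT_THRESHOLD = 10


class ToyMonitor:
    """Counts how often each function runs and labels it cold, warm, or hot."""

    def __init__(self):
        self.calls = Counter()

    def record(self, name):
        """Register one more run of `name` and return its current label."""
        self.calls[name] += 1
        return self.temperature(name)

    def temperature(self, name):
        count = self.calls[name]
        if count >= HOT_THRESHOLD:
            return "hot"    # candidate for the optimizing compiler
        if count >= WARM_THRESHOLD:
            return "warm"   # candidate for compilation into stubs
        return "cold"       # keep interpreting


monitor = ToyMonitor()
history = [monitor.record("add") for _ in range(12)]
print(history[0], history[2], history[9])  # cold warm hot
```

The point of the sketch is only that the engine pays for compilation once a piece of code has proven it is worth the effort.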

To make that faster version, the optimizing compiler must make assumptions (for example, that a variable always holds the same type), and those assumptions must be checked before the optimized code runs. When the JIT detects that an assumption was wrong, it throws away the optimized code and falls back to a slower version, a process referred to as ‘deoptimization.’ Even with these major advancements, the performance of JavaScript can still be unpredictable: the engine spends run time on optimization and deoptimization, and significant memory on bookkeeping, optimization, and recovery.
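The assume, check, and fall back cycle can be sketched in a few lines of Python. This is a toy model, not how any real engine is implemented: `SpecializedAdd` and `generic_add` are hypothetical names, and the "fast path" is ordinary Python standing in for type-specialized machine code.

```python
def generic_add(a, b):
    """Slow path: works for any operand types, like interpreted code."""
    return a + b


class SpecializedAdd:
    """Toy 'optimized' add that assumes both operands are ints."""

    def __init__(self):
        self.optimized = True
        self.deopt_count = 0

    def __call__(self, a, b):
        if self.optimized:
            # Guard: validate the assumption before using the fast path.
            if type(a) is int and type(b) is int:
                return a + b  # stands in for int-only machine code
            # Assumption failed: discard the fast path (deoptimize).
            self.optimized = False
            self.deopt_count += 1
        return generic_add(a, b)


add = SpecializedAdd()
print(add(1, 2))                       # 3 (fast path)
print(add("a", "b"))                   # ab (guard fails, generic path)
print(add.optimized, add.deopt_count)  # False 1
```

Every call pays for the guard, and a failed guard wastes the earlier optimization work, which is one reason JIT performance can be hard to predict.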

Let us now return to the first concept: assembly. Assembly is a low-level programming language, dating back to the late 1940s, that gives human-readable names to the instructions built into a computer’s CPU. Put differently, it is a human-friendly abstraction that frees programmers from having to write raw binary for machines to understand (an accessible and more lively explanation by Lin Clark here). Assembly language is still in use today and can be viewed as a conceptual precursor to WASM, but it is slow and tedious to write and lacks portability: because each assembly language targets one specific hardware architecture, moving code to another architecture is non-trivial work, even with advances in automation. The idea of a low-level compilation target was brought to the web as asm.js, a strict subset of JavaScript that compilers for languages such as C/C++ can target, so that C/C++ programs run inside the browser’s sandbox much faster than ordinary JavaScript and at speeds approaching natively compiled code. In some specific use cases, most notably on mobile devices, downloading and compiling asm.js can still be very slow. Instead of shipping bulky source text, why not ship a compact binary format that is already close to machine code? Enter WebAssembly (WASM).

The Dawn of a New Epoch for Cloud Computing

WebAssembly (WASM) and the Third Wave of Cloud Computing

Image Credit: WebAssembly Landscape 2022

WASM was announced in 2015, and the initial MVP was released in 2017. WASM not only forever changed how developers code for web applications, but also became the new open standard that enabled web browsers to run binary code and fostered the paradigm shift into cloud computing.

In short, WebAssembly is a compact binary instruction format for a lightweight, load-time-efficient, and secure virtual machine. Code written in languages such as C, C++, and Rust can be compiled to it and run at near-native speed, and languages such as Python and Ruby can run on it as well. It started on the browser side, but WASM has transcended the browser and continues to bring new primitives to the server side. It will keep rising through the cloud-native ecosystem.

To add a bit more color on the former waves of cloud computing, and to put cloud computing as simply as we can: it is an environment that provides all the facilities that workloads need to execute successfully. Two of the other key technologies that support cloud computing are:

Virtual machine (VM): virtualizes the entire operating system. It is the most heavyweight class of cloud computing, with startup times measured in minutes and image sizes measured in gigabytes.

Container technology: makes it possible to bring portable and configurable services and servers to the cloud ecosystem.

To make the third wave of cloud computing happen, WASM will need to improve cost and runtime efficiency by a factor of ten for workloads that run in containers today. And as we have seen, WASM has been reported to run microservices with up to 100x improvements in memory footprint and startup time.

What Makes WASM Great for the Cloud

Insatiable Quest for Performance

WASM’s low-level binary format is designed for high-performance computation at near-native speeds. JavaScript arrives as source text that the engine must parse, convert to bytecode (an instruction set designed for efficient execution by a software interpreter), and then optimize and deoptimize while it executes. WASM, by contrast, is statically typed, so most optimization happens earlier, when the source language is compiled to WASM. The resulting binary files are also typically smaller than the equivalent JavaScript, hence the much faster download and start-up.
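To make the "compact, statically typed binary" idea concrete, here is a small Python sketch that walks the sections of a hand-assembled WASM module exporting a single `add(i32, i32) -> i32` function. The byte values follow the WebAssembly binary format; the helper names (`read_uleb128`, `list_sections`) and the partial `SECTION_NAMES` table are just for this illustration, and the sketch only lists sections rather than validating or running the module.

```python
# Hand-assembled module exporting add(i32, i32) -> i32 (illustrative bytes).
MODULE = bytes([
    0x00, 0x61, 0x73, 0x6D,                                 # magic: "\0asm"
    0x01, 0x00, 0x00, 0x00,                                 # version 1
    0x01, 0x07, 0x01, 0x60, 0x02, 0x7F, 0x7F, 0x01, 0x7F,   # type section
    0x03, 0x02, 0x01, 0x00,                                 # function section
    0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,   # export "add"
    0x0A, 0x09, 0x01, 0x07, 0x00,                           # code section
    0x20, 0x00, 0x20, 0x01, 0x6A, 0x0B,                     # get 0, get 1, i32.add, end
])

# Only the section ids that appear in this tiny module.
SECTION_NAMES = {1: "type", 3: "function", 7: "export", 10: "code"}


def read_uleb128(data, pos):
    """Decode an unsigned LEB128 integer; return (value, next_position)."""
    value, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        if byte & 0x80 == 0:
            return value, pos
        shift += 7


def list_sections(data):
    """Return [(section_name, payload_size)] after checking the header."""
    if data[:4] != b"\x00asm":
        raise ValueError("not a WebAssembly module")
    if int.from_bytes(data[4:8], "little") != 1:
        raise ValueError("unsupported version")
    sections, pos = [], 8
    while pos < len(data):
        section_id = data[pos]
        size, pos = read_uleb128(data, pos + 1)
        sections.append((SECTION_NAMES.get(section_id, f"id {section_id}"), size))
        pos += size  # skip over the section payload
    return sections


print(len(MODULE), list_sections(MODULE))
# 41 [('type', 7), ('function', 2), ('export', 7), ('code', 9)]
```

The whole module is 41 bytes, and the function's signature is declared up front in the type section (`0x7F` is the code for `i32`), so the engine never has to guess types while the code is running.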

In a nutshell, WASM can improve the memory footprint and start-up time in the browser by orders of magnitude. The same properties apply to the cloud, where small binaries move quickly across networks and fast start-up makes WASM more efficient for the workloads that run in containers today (serverless functions, microservices, etc.).

Exceptional Portability for Cross-platform and Cross-architecture Use

Gone are the days when people would tolerate a website telling them they cannot access a page unless they open it in Safari. WASM was developed to bridge platforms and architectures, and it is a compilation target for many different source languages. WASM is a universal standard format that allows users to bring in third-party capabilities without risking a system crash. In the cloud setting, people running on Arm or Intel hardware, on Linux or Windows, or on any bespoke operating system don’t want to rebuild the binary or image for each target. They want to compile once and push it to the cloud, to be run according to whatever schedule the cloud has. Thus, WASM is a good match for the cloud because it is virtualized and can run on any platform or environment.

Unexploitable and Natively Secured by Sandbox

Security is paramount in web browsers and likewise in the cloud. Unlike native programs, where functions and data are reachable through raw memory addresses, WASM runs code in a sandbox (i.e., an isolated environment that runs programs without affecting the host platform, system, or other applications) and encapsulates the program’s memory in a safe zone that it cannot reach outside of.
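A toy Python model can show what "memory in a safe zone" means in practice: the module sees one contiguous block of bytes, and every read or write is checked against its bounds. `LinearMemory` and `Trap` are hypothetical names for this sketch, which leaves out everything else a real WASM runtime does.

```python
class Trap(Exception):
    """Raised when the guest tries to touch memory outside its sandbox."""


class LinearMemory:
    """Toy sandboxed memory: one byte array, every access bounds-checked."""

    def __init__(self, size):
        self._bytes = bytearray(size)

    def _check(self, offset, length):
        if offset < 0 or length < 0 or offset + length > len(self._bytes):
            raise Trap(f"out-of-bounds access at {offset}+{length}")

    def store(self, offset, data):
        self._check(offset, len(data))
        self._bytes[offset:offset + len(data)] = data

    def load(self, offset, length):
        self._check(offset, length)
        return bytes(self._bytes[offset:offset + length])


memory = LinearMemory(16)
memory.store(0, b"hello")
print(memory.load(0, 5))   # b'hello'
try:
    memory.load(12, 8)     # would read past the end of the sandbox
except Trap as err:
    print("trapped:", err)  # trapped: out-of-bounds access at 12+8
```

An out-of-bounds access stops the guest program instead of silently reading the host's memory, which is the property the sandbox is there to provide.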

Software supply chain security has been an ongoing concern for a lot of companies, and mitigating every vulnerability across hundreds of dependencies is difficult. What is more, cloud vendors that have federal contracts must invest a tremendous amount of work to bring observability into what is behind each of their microservices. WASM is interesting because a sandboxed module is not exposed to the same attack surface as code running directly on a traditional OS such as Linux. A good example here is RLBox, which is used in Firefox: it takes parts of the application stack, compiles them to WASM modules, and then runs each module in isolation as machine code. This mitigates whole classes of zero-day vulnerabilities that would otherwise affect a single monolithic application.

Why now? Reflections on the Current State of WASM

It has been almost seven years since WASM was announced, and despite some developments, WASM has been primarily applied to browser-side use cases. Why is now the tipping point for WASM to take off in multiple server-side use cases?

Let us consider the history of Java’s adoption. Java came out in 1995 and for a long time was adopted mainly on the browser side. It was not until 2001 that more and more server-side applications took off for Java. Without that penetration on the browser side (i.e., people learning the language for other projects), it would have been hard for Java to pull off mass adoption on the server side.

The adoption curve of WASM could follow the trail blazed by Java. At the end of 2019, WASM became an official W3C standard, alongside HTML, CSS, and JavaScript. This laid the foundation for the eventual migration of WASM from the browser side to the server side. Indeed, this is what has happened. Besides being supported by all the mainstream browsers, WASM is now offered in functions and plugins by computing companies like Cloudflare, Fastly, Netlify, and even AWS.

A bit of Moore’s law also applies here, because engineering efficiency and developer efficiency are the two most important factors driving WASM’s adoption. For the past decade, no matter how slow Java was, machine efficiency kept improving, so Java’s performance also improved from the user’s perspective. However, the improvement in machine efficiency has plateaued in the past five years. In today’s cloud-native environment, low-level, natively compiled languages like Rust and Swift are gaining popularity, but to be deployed to the cloud they must be isolated in containers. We’re at the point of switching to something much lighter, with a much lower memory footprint than containers, to improve developer efficiency. Companies want to improve runtime performance without adding extra machines, and they’re looking to adopt new technologies to optimize costs and improve performance. (Here’s another great write-up by Renee Shah, who is also convinced that it’s takeoff time for WASM.)

Image Credit: WebAssembly: The Future Looks Bright

Whenever fundamental changes are incoming, we need tooling systems and workflows built around them as flywheels to accelerate the adoption process. We are already starting to see new frameworks: Microsoft’s Blazor client framework has been the most notable one, and companies like Cosmonic and Suborbital are building application frameworks for WASM. Compilers, SDKs, and toolchain sets (APIs, GDKs, etc.) are gradually being built. And WASM is still in the early days of its maturation cycle! For example, although compilers are available to generate WASM binaries from some major languages, on the server side few are fully compatible with the corresponding developer environments. Furthermore, the specifications and technical capabilities such as multithreading, testing tools, registries, orchestration, and observability tools are still works in progress or missing. This is where a lot of emerging opportunities will come from.

Where is WASM taking Computing Technology?

The Future of Runtime: Everything Will Become a Plug-in

WASM’s broad coverage of popular languages, including C, C++, Go, Ruby, and Rust, and its sandbox-isolated nature make it a good candidate for a universal compilation target. If WASM becomes a default compilation target, the entire runtime will eventually become a LEGO-like structure that allows extensions and third parties to build on top of current applications without adding overhead or causing downtime. WASM can push people to write applications more like plugins (even serverless/FaaS can become a plugin), and everything would become an extension of the plug-in model. What is more, the LEGO-like WASM-empowered runtime will make it easier to provide runtime guarantees at every layer of the WASM stack. For more technical details, you can read about how WASM is becoming the next-gen language runtime, as written by Ben L. Titzer here.

Democratization of Software

There’s another big component of WASM’s migration to the server side that we haven’t yet mentioned: WASI (the WebAssembly System Interface). WASI is the modular interface that allows developers to run WASM outside of the browser, with a standardized set of APIs through which WASM modules access system resources such as the filesystem, networking, clocks, and random numbers. One innovation that has been developed alongside WASI is the component model, a language-neutral approach to building WASM applications. With the component model, we would finally get universal language and library support. Think about the possibilities for developers who can build many different WASM modules on top of each other. Today developers are separated by different language ecosystems, but in the future, no matter who you are and where you are along your skill journey, you will be able to get the best components, libraries, and tools to work with every layer of the stack, and all sets of applications will start talking to each other.
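One way to picture how a system interface can stay secure is the "preopened directory" idea that WASI runtimes use: the guest module can only touch the parts of the filesystem the host explicitly hands over. The Python sketch below is a toy model of that check only. `ToyHost` and `can_open` are hypothetical names, not part of WASI or any runtime's API, and the sketch compares path strings without touching a real filesystem.

```python
import posixpath


class ToyHost:
    """Toy stand-in for a host that grants a guest access to chosen directories."""

    def __init__(self, preopened_dirs):
        # Directories the host explicitly hands to the guest module.
        self.preopened = [posixpath.normpath(d) for d in preopened_dirs]

    def can_open(self, path):
        """Allow a path only if it resolves inside a preopened directory."""
        resolved = posixpath.normpath(path)  # collapses ".." segments
        return any(
            resolved == root or resolved.startswith(root + "/")
            for root in self.preopened
        )


host = ToyHost(["/data/in"])
print(host.can_open("/data/in/report.csv"))     # True
print(host.can_open("/data/in/../secret.txt"))  # False
print(host.can_open("/etc/passwd"))             # False
```

The module gets no ambient access to the machine: anything it can reach was granted on purpose by whoever runs it.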

Exciting Early Innings: The New Use-Case Landscape for WASM

On the browser side, we’ve seen companies like Shopify (optimizing performance-sensitive features like checkout for the user), Figma (optimizing browser load), Autodesk (powering the AutoCAD web app, which lets users access CAD from the web), and Google Earth (improving cross-browser access to Google Earth) adopt WASM. All these innovations on the browser side leveraged WASM to build a much better user experience. In a cloud computing landscape that embraces WASM and where everything is becoming a plugin, each WASM-empowered layer is democratizing the software stack. Here are some exciting areas for WASM on the server side:

Microservice Clustering & Serverless Compute

Microservices have been the most prevalent architectural and organizational approach for software development. A microservice application is composed of small independent services that communicate over well-defined APIs. The architecture itself doesn’t have many advantages. Rather, it reflects companies’ organizational structures, with each service owned by a small, self-contained team. Big organizations like Microsoft, TikTok, and even Elon Musk’s newly acquired Twitter already run tens of thousands of microservices.

How to cluster and group these microservices, and which tactics to use, have been huge challenges for companies. That’s where WASM comes in and provides a really clean solution. If all these microservices are compiled to WASM, the start-up time drops from several seconds to a few milliseconds or even microseconds, and the memory footprint is also dramatically reduced compared with Linux containers (from as much as gigabytes for compute-intensive work like AI/ML inference down to a few megabytes). This is what makes “scale to zero” possible for the new WASM-enabled microservice architecture: it can take code all the way from cold to warm on demand, so companies don’t need to maintain hot instances. Startups like SecondState are dedicated to helping customers deploy such runtimes (you can read even more from Renee Shah here). The microservice trend started about five years ago, and the bulk of companies are still adopting it. Using WASM to cluster microservices could be a strong use case for its future development.

The other big problem for the cloud today is cost. We will still need to run the same amount of computing and memory for specific tasks. WASM can vastly reduce our costs for these cases. We can adopt WASM and use it to expand the cloud-native programming model to such resource-constrained and performance-sensitive environments as the edge cloud, embedded or serverless functions platforms, and blockchains, as well as mobile and IoT devices.

Edge Compute for Data Pipelining & Processing

There is an onslaught of data pipelines (streaming data, event queues, etc.) on the edge, and the data requires constant transformation before it enters the database for querying. In the context of web3, Dune Analytics has been solving the pain point of transforming raw real-time time-series data into relational data (e.g., account structure, account balance, and accounts in the smart contract, etc.). If we reflect on web2, old database jargon like ETL could be a good starting point for embedding WASM into existing data frameworks. Companies in the space could work with database companies to develop user-defined functions (UDFs).

Indeed, companies like SingleStore, TiDB, and ScyllaDB have been using WASM to extend their databases with UDFs, which enable developers to reuse libraries and push compute to the data, so that the data does not have to be exported to other applications for processing and transformation. InfinyOn (a TSVC portfolio company) has developed a data streaming engine that allows users to pass in their own transformations and bring arbitrary code to run on these systems. And Redpanda deals with data mostly from edges, event logs, and streaming data. All of these transformations can be written in WASM for more efficient data processing.
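To give a feel for the "bring your own transformation" pattern, here is a minimal Python sketch of a pipeline that applies user-supplied functions to each record before it would reach the database. It is not the API of any product named above: `run_pipeline`, `parse_event`, and `drop_small` are hypothetical, and in a real system the user functions would be compiled WASM modules running inside the engine's sandbox rather than Python callables.

```python
def run_pipeline(records, transforms):
    """Apply each user-supplied transform in order; returning None drops a record."""
    for record in records:
        for transform in transforms:
            record = transform(record)
            if record is None:
                break          # a transform filtered this record out
        else:
            yield record       # every transform kept the record


def parse_event(line):
    """Example user function: turn a raw CSV line into a structured event."""
    user, amount = line.split(",")
    return {"user": user, "amount": float(amount)}


def drop_small(event):
    """Example user function: keep only events worth at least 10."""
    return event if event["amount"] >= 10 else None


raw = ["alice,12.5", "bob,3.0", "carol,40"]
print(list(run_pipeline(raw, [parse_event, drop_small])))
# [{'user': 'alice', 'amount': 12.5}, {'user': 'carol', 'amount': 40.0}]
```

The engine owns the loop and the data, while the user only supplies small, self-contained functions, which is why a sandboxed and portable format is a natural fit for them.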

Cloud Native Distributed Computing

The variety of application and system architectures, distributed security, and AI/ML deployed on the edge are constantly giving rise to distributed computing. The shifting paradigm in distributed computing is still hampered by the diversity and incompatibility of public clouds and CPUs, diverse operating environments, security, and distributed application architecture. WASM’s near-native performance and portability position it well to function in such distributed and varied environments. We’ve seen Wasmtime optimized for server-side execution and Wasm-micro-runtime for embedded devices. Distributed application runtimes like wasmCloud (developed by Cosmonic) have brought WASM not only to the server but also to the edge. WASM has also been embedded into platforms like Envoy as an extension mechanism, and it has become a direct cloud-native orchestration target via projects like Krustlet, which was recently accepted into the CNCF Sandbox (here’s another good read).

Takeaways

Even though we have only seen the early innings of WASM and its maturity cycle is just starting, the landscape for WASM is growing every day. WASM is finding a home in every layer of the cloud technology stack and becoming not only the future infrastructure for cloud-native computing, by enabling LEGO-like plugins, but also a de facto solution across most languages. WASM will keep revolutionizing serverless/FaaS with its actor and event-driven architecture.

Indeed, WASM is envisioned as the default runtime for the entire software stack: applications that are all compiled to WASM, distributed and networked through WASM filters, admitted by a WASM Open Policy Agent rule, and versioned by a WASM-native package manager (on the client side, code running on any edge device and operating on data would be driven by WASM as well). I’m still in the early days of exploring WASM’s potential and would love to get in touch if you have been in the field for a while or if you are a founder contributing a piece that completes the new, strong WASM ecosystem.